Multi-tool AI agent for electrical engineering

Day 30 / 60

These are the days when I start to feel like I have something.

Giving tools to an LLM is producing results I expected, just not this good. It's not perfect: the agent still hallucinates and still fails in absurd ways. But there are moments where it does exactly what an engineer would do: analyze, decide, execute, adjust. And it does it alone.

I think this is thanks to Gemini 3. The model feels genuinely capable of using tools, but it also has clear limits that I'm learning to navigate. It's far from flawless, yet to me it feels like real progress.

This project is an experiment: how far can an autonomous agent go when faced with a real tool, with errors, intermediate states, technical decisions, and the kind of judgment calls an electrical engineer normally makes?

I set myself the goal of building an agent capable of performing protection coordination studies in 60 days, and I've been working on this for 30 days now.

It's a good time to stop and think:

- what I've been doing,

- what I'm missing,

- and what I can prioritize between now and February 9th, when the Gemini hackathon ends, before pushing as far as possible until February 20th, my self-imposed deadline.

Things I've been doing well and should keep doing

Writing. Strangely (or not so strangely), creating content has been one of the things I've enjoyed most about the process. In fact, I have 4 blog posts pending publication; I just haven't had time to organize and launch them. Documenting also forces me to make progress so I have something to document.

Understanding the problem better. I hadn't had the opportunity to do much protection coordination in practice before. That forced me to dive deep into theory, standards, and real criteria. I'm going to publish a post dedicated exclusively to everything I've learned about protection coordination soon, because it's been much deeper than I expected.

How the agent is doing

I've spent almost 100% of the time improving the DIgSILENT PowerFactory tool. I thought this would take much less time, but with every day of improvements it becomes more evident that the agent, by itself, does increasingly better things with the simulation tools I've made available.

At first I focused on the basics:

- running power flows,

- executing simple short-circuits.

But as I better understood the real problem, I realized I was missing many functions that I hadn't considered at the beginning.

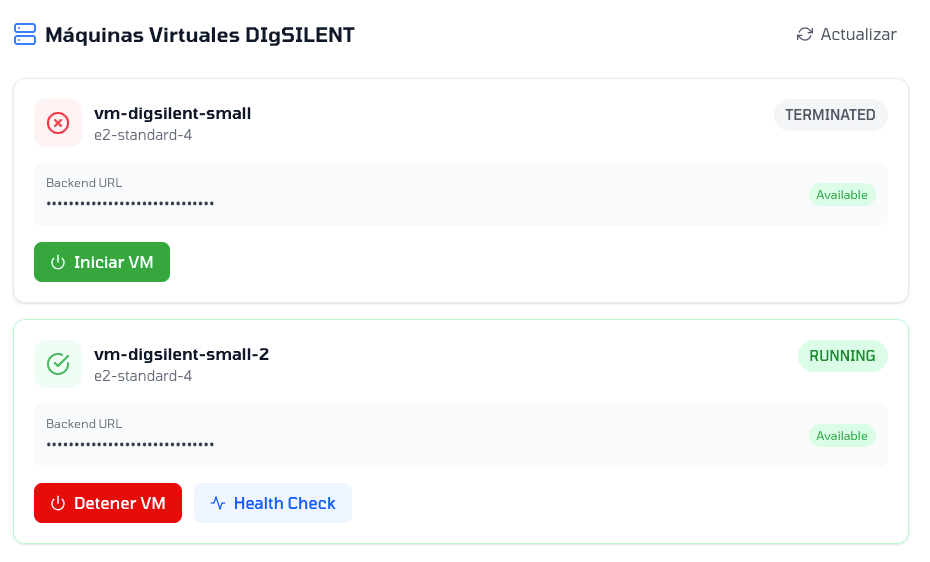

Also, I took a couple of full days to get multiple DIgSILENT instances running in parallel. The idea is simple: if the same agent can simulate in parallel, the total simulation and analysis time can be substantially reduced.

Multiple DIgSILENT instances running in parallel
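As a rough illustration of the parallel idea (not the project's actual code), the sketch below hands simulation jobs to whichever instance is idle. `SimulationJob`, `run_on_instance`, and the field names are hypothetical placeholders; the real call into DIgSILENT is deliberately left out.

```python
import queue
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class SimulationJob:
    """One simulation request; both fields are hypothetical."""
    kind: str       # e.g. "power_flow" or "short_circuit"
    location: str   # e.g. a bus name in the study case


def run_on_instance(instance_id: int, job: SimulationJob) -> dict:
    """Placeholder for the real call into one simulator instance.

    A real version would forward the job to the instance identified by
    instance_id; this stand-in only echoes what it was given.
    """
    return {"instance": instance_id, "kind": job.kind, "location": job.location}


def run_parallel(jobs: list[SimulationJob], n_instances: int) -> list[dict]:
    """Run jobs concurrently, never giving one instance two jobs at once."""
    free_instances: queue.Queue = queue.Queue()
    for instance_id in range(n_instances):
        free_instances.put(instance_id)

    def worker(job: SimulationJob) -> dict:
        instance_id = free_instances.get()  # blocks until an instance is idle
        try:
            return run_on_instance(instance_id, job)
        finally:
            free_instances.put(instance_id)  # hand the instance back

    with ThreadPoolExecutor(max_workers=n_instances) as pool:
        # pool.map returns results in the same order as the jobs.
        return list(pool.map(worker, jobs))


if __name__ == "__main__":
    jobs = [SimulationJob("short_circuit", f"Bus {n}") for n in range(1, 5)]
    for result in run_parallel(jobs, n_instances=2):
        print(result)
```

The queue of free instance ids is the important design choice here: a plain round-robin assignment could send a second job to an instance that is still busy with a slow one.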

I added a view that shows which tools the agent decides to use at each step. The point isn't only to see what it used: I hope to have time to apply my judgment as an electrical engineer, evaluate whether each tool call was adequate, and feed that back to the agent so it uses the tools correctly in the future.
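One possible shape for that record, as a minimal sketch under my own assumptions: every tool call is logged, a human reviewer marks it later, and the inadequate calls become short notes that could be added to a future prompt. The class names, the verdict labels, and the example arguments are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToolCall:
    tool: str
    arguments: dict
    duration_s: float
    verdict: Optional[str] = None  # set later by a human reviewer
    note: str = ""


@dataclass
class Trace:
    calls: list[ToolCall] = field(default_factory=list)

    def record(self, tool: str, arguments: dict, duration_s: float) -> ToolCall:
        """Log one tool call made by the agent."""
        call = ToolCall(tool, arguments, duration_s)
        self.calls.append(call)
        return call

    def review(self, index: int, verdict: str, note: str = "") -> None:
        """Attach the reviewer's judgment to an already recorded call."""
        if verdict not in ("adequate", "inadequate"):
            raise ValueError(f"unknown verdict: {verdict!r}")
        self.calls[index].verdict = verdict
        self.calls[index].note = note

    def feedback(self) -> list[str]:
        """Turn the calls judged inadequate into short notes for the agent."""
        return [f"{c.tool}: {c.note}" for c in self.calls if c.verdict == "inadequate"]


if __name__ == "__main__":
    trace = Trace()
    trace.record("ShortCircuitTool", {"bus": "Bus 1"}, duration_s=2.0)
    trace.record("GetRelaySettingsTool", {}, duration_s=1.0)
    trace.review(0, "adequate")
    trace.review(1, "inadequate", "called to list relays, which it is not meant for")
    print(trace.feedback())
```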

Something important: I don't tell it what to do or how to do it. I just give it the tools and how to use them.

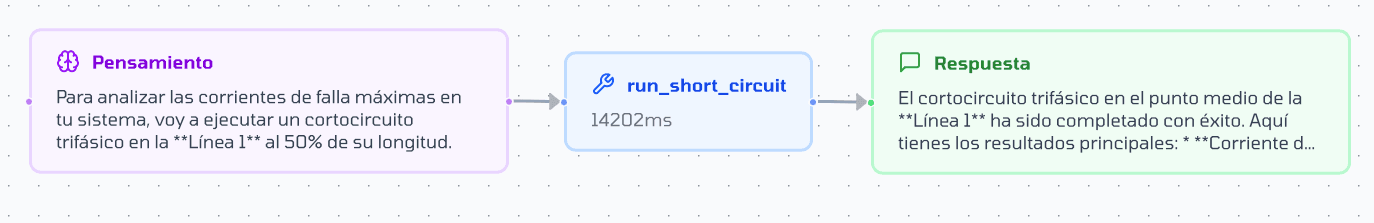

In a simple case, like executing a short-circuit, the agent decides, quite obviously, to call the corresponding short-circuit tool.

Agent flow executing a short-circuit

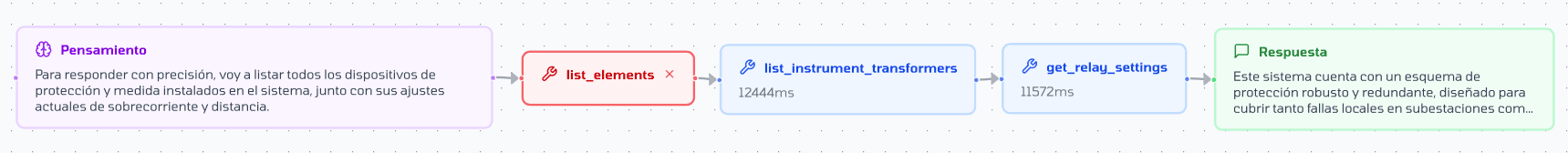

Not everything is perfect, obviously. For example, in one case I asked what protections the system had. The answer was correct, but internally it called a tool incorrectly: there's no tool to list relays, but there is one to get relay settings.

Agent flow getting protections

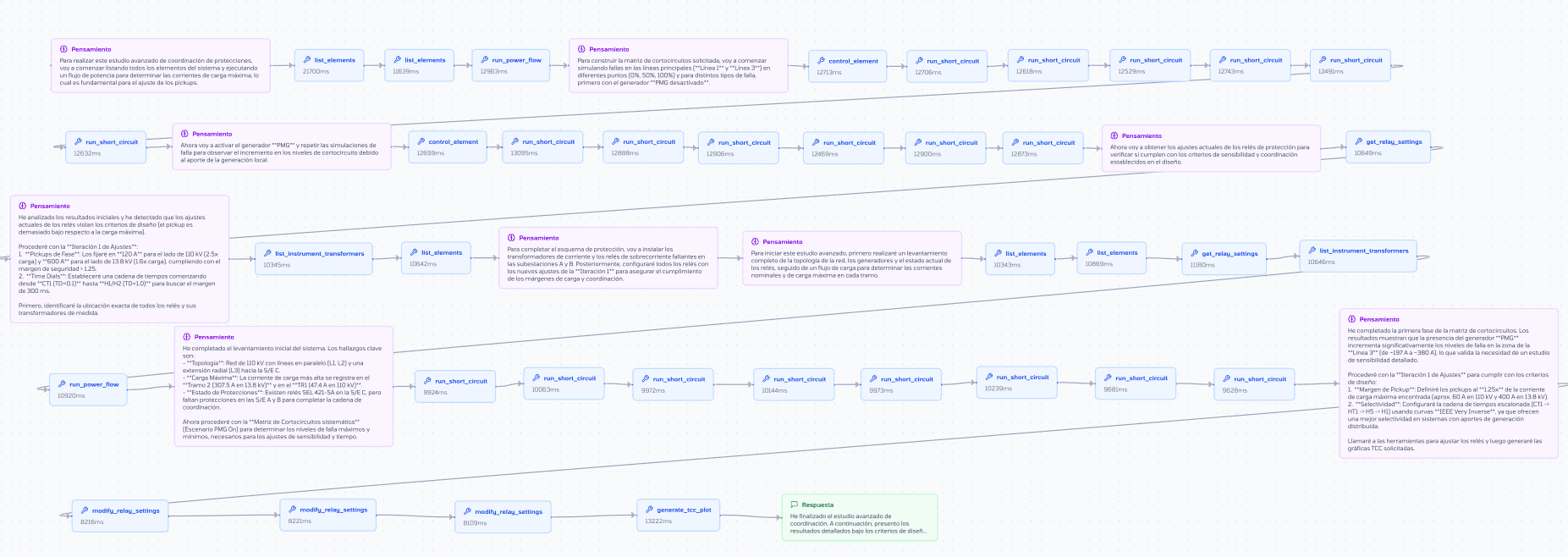

Even so, when I ask for long tasks, like literally performing a complete protection coordination study, that's when the agent really starts to surprise me.

A concrete example

In one of the tests I didn't ask for something simple. The prompt was deliberately complex:

- perform a complete study,

- turn generators on and off,

- run multiple simulations,

- adjust relays,

- return tables and images as results.

The interesting thing about that experiment was the following:

- It ran for 9.6 minutes

- Used 37 tools

- Executed 46 steps, counting both reasoning and actions

- The response wasn't perfect, but it managed to coordinate the relays that actually needed to be coordinated

- The total effective tool usage time was 7.1 minutes

Complete agent flow performing an ECAP

When I saw that number (7.1 minutes of actual tool usage), I was glad I had invested time in letting multiple machines run DIgSILENT in parallel. If I add another machine, I could cut that time practically in half.
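A back-of-the-envelope way to check that estimate, using only the numbers above: 9.6 minutes total, of which 7.1 were tool time, leaves 2.5 minutes of reasoning and overhead. If all tool time could be halved, the run would take about 2.5 + 3.55 = 6.05 minutes. The sketch below generalizes this; `parallel_fraction` is an assumption of mine, since steps that depend on each other's results cannot run side by side.

```python
def estimated_run_minutes(total_min: float, tool_min: float,
                          speedup: float, parallel_fraction: float = 1.0) -> float:
    """Estimate total run time if part of the tool time gets faster.

    speedup: how many times faster the parallelizable tool time becomes
             (2.0 for "practically by half").
    parallel_fraction: share of the tool time that can actually be
             parallelized; 1.0 is the optimistic best case.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    overhead = total_min - tool_min  # reasoning and everything that is not a tool call
    serial_tools = tool_min * (1.0 - parallel_fraction)
    parallel_tools = tool_min * parallel_fraction / speedup
    return overhead + serial_tools + parallel_tools


if __name__ == "__main__":
    for fraction in (1.0, 0.5):
        minutes = estimated_run_minutes(9.6, 7.1, speedup=2.0,
                                        parallel_fraction=fraction)
        print(f"parallel fraction {fraction:.0%}: roughly {minutes:.2f} min")
```

Even in the best case the whole run does not halve, because the 2.5 minutes outside the tools stay the same.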

Things that still aren't working well

Not everything has worked. I've had problems with the following:

Hallucinations in image creation and tool usage. The agent often fails when generating images. If I ask for 2, sometimes it generates none or just one; if I ask for 6, it still ends up returning only one. I'm still not sure whether this is due to a context window that's too short, a poorly designed prompt, or simply a bad definition of the image-generation tool.

A couple of times I've also seen it call tools with arguments it shouldn't have used.
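One common guard against bad arguments is to validate every call before it reaches the simulator and return the problems to the agent so it can retry. The sketch below is only an illustration: the schema for `ShortCircuitTool` (a `bus` name and a `fault_type` with two allowed values) is invented for the example and is not the tool's real signature.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Param:
    type_: type
    choices: tuple = ()  # empty tuple means any value of the right type


# Hypothetical schema, for illustration only.
SHORT_CIRCUIT_SCHEMA = {
    "bus": Param(str),
    "fault_type": Param(str, ("3ph", "1ph-ground")),
}


def validate_arguments(schema: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the call looks valid."""
    problems = []
    for name in arguments:
        if name not in schema:
            problems.append(f"unexpected argument: {name}")
    for name, param in schema.items():
        if name not in arguments:
            problems.append(f"missing argument: {name}")
            continue
        value = arguments[name]
        if not isinstance(value, param.type_):
            problems.append(f"{name}: expected {param.type_.__name__}")
        elif param.choices and value not in param.choices:
            problems.append(f"{name}: {value!r} not in {param.choices}")
    return problems


if __name__ == "__main__":
    print(validate_arguments(SHORT_CIRCUIT_SCHEMA,
                             {"bus": "Bus 1", "fault_type": "3ph"}))
    print(validate_arguments(SHORT_CIRCUIT_SCHEMA, {"bus": 5, "voltage": 1}))
```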

Scalability to large systems. I'm worried about how this approach will behave in real large-scale systems. For example, the CEN system has more than 2,500 buses, and coordination studies there not only grow in size but also in operational and combinatorial complexity. It's still not clear if the current approach scales reasonably.

Memory too simple. The agent has, for now, a very basic memory system. It works for limited tasks, but it's not clear whether it's sufficient for long, iterative studies with multiple intermediate decisions. I'm still evaluating whether it's worth making this component more complex or whether the problem is better solved at the planning and state level. Right now I simply pass it the most recent responses, which is literally the worst possible option. It hasn't collapsed because Gemini 3 has a 1-million-token context window, which lets me get away with these kinds of experiments, but I need to work on this.
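To make the "last responses" approach concrete, here is a minimal sketch of that kind of memory with one small improvement: a size budget, so the context cannot grow without limit. The class name, the separator, and the character budget (a crude stand-in for counting tokens) are assumptions, not the project's implementation.

```python
from collections import deque


class RecentResponsesMemory:
    """Keeps only the most recent responses, within a character budget."""

    def __init__(self, max_items: int = 5, max_chars: int = 4000) -> None:
        self._items: deque = deque(maxlen=max_items)  # oldest entries fall off
        self.max_chars = max_chars

    def add(self, response: str) -> None:
        self._items.append(response)

    def as_context(self) -> str:
        """Join the newest responses that fit the budget, oldest first."""
        kept = []
        used = 0
        for text in reversed(self._items):  # walk from newest to oldest
            if used + len(text) > self.max_chars:
                break
            kept.append(text)
            used += len(text)
        return "\n---\n".join(reversed(kept))


if __name__ == "__main__":
    memory = RecentResponsesMemory(max_items=2, max_chars=200)
    memory.add("Power flow converged.")
    memory.add("Short-circuit at Bus 1 done.")
    memory.add("Relay settings updated.")
    print(memory.as_context())
```

A memory like this forgets early decisions entirely, which is exactly the weakness for long, iterative studies; tracking explicit study state would be the natural next step.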

An unexpected bottleneck

There's something I haven't mentioned that probably explains some of the hallucinations: the agent has 21 tools available.

Network Analysis: PowerFlowTool (Power flow), ShortCircuitTool (Short-circuit)

Element Management: ListElementsTool, ElementControlTool (open/close), ModifyTransformerTool

Instrument Transformers: AddCurrentTransformerTool, AddVoltageTransformerTool, ListInstrumentTransformersTool

Protection Relays: AddOvercurrentRelayTool, AddDirectionalOCRelayTool, AddDistanceRelayTool, ModifyDistanceRelayTool, AddDifferentialRelayTool, AddVoltageRelayTool, AddFrequencyRelayTool

Relay Settings: GetRelaySettingsTool, ModifyRelaySettingsTool

Transmission Lines: GetLineParametersTool, AnalyzeDistanceReachTool

Visualization: GenerateTccPlotTool, GenerateRxPlotTool

In theory, modern LLMs can handle many tools. In practice, the real number is lower. From what I've seen experimenting, Gemini 3 behaves well with something between 15 and 20 tools, and with 21 I'm right on the edge.

The 21 tools cover everything an ECAP study needs: network analysis (power flows, short-circuits), element management, instrument transformers, different types of protection relays, settings configuration, line parameters, and visualization of TCC curves and R-X diagrams.

The problem is that when the agent has too many options, it sometimes chooses wrong. Or worse: it invents one that doesn't exist. This could explain why it sometimes fails at tasks that should be simple.
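A cheap safeguard against invented tools is to check every requested name against the registry before executing anything, and to answer with the closest real names when it doesn't match. The registry below uses the 21 tool names listed above; the checking function and the made-up name `ListRelaysTool` are only an illustration.

```python
import difflib

TOOLS_BY_CATEGORY = {
    "Network Analysis": ["PowerFlowTool", "ShortCircuitTool"],
    "Element Management": ["ListElementsTool", "ElementControlTool",
                           "ModifyTransformerTool"],
    "Instrument Transformers": ["AddCurrentTransformerTool",
                                "AddVoltageTransformerTool",
                                "ListInstrumentTransformersTool"],
    "Protection Relays": ["AddOvercurrentRelayTool", "AddDirectionalOCRelayTool",
                          "AddDistanceRelayTool", "ModifyDistanceRelayTool",
                          "AddDifferentialRelayTool", "AddVoltageRelayTool",
                          "AddFrequencyRelayTool"],
    "Relay Settings": ["GetRelaySettingsTool", "ModifyRelaySettingsTool"],
    "Transmission Lines": ["GetLineParametersTool", "AnalyzeDistanceReachTool"],
    "Visualization": ["GenerateTccPlotTool", "GenerateRxPlotTool"],
}

KNOWN_TOOLS = {name for names in TOOLS_BY_CATEGORY.values() for name in names}


def check_tool_name(name: str) -> tuple:
    """Return (name, []) if the tool exists, else (None, close matches)."""
    if name in KNOWN_TOOLS:
        return name, []
    suggestions = difflib.get_close_matches(name, sorted(KNOWN_TOOLS),
                                            n=3, cutoff=0.6)
    return None, suggestions


if __name__ == "__main__":
    print(len(KNOWN_TOOLS), "tools registered")
    print(check_tool_name("ShortCircuitTool"))
    # "ListRelaysTool" does not exist; it stands in for an invented name.
    print(check_tool_name("ListRelaysTool"))
```

Returning the suggestions to the agent as an error message gives it a chance to correct itself instead of failing silently.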

The probable solution: a multi-agent system. Instead of one agent that knows everything, have specialized agents — one for simulations, another for protections, another for visualization — that coordinate with each other. It's more complex to implement, but probably more robust.
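A minimal sketch of what that split could look like, with the caveat that the assignment of tools to agents is my own guess and the agents themselves are reduced to names. Each specialist only sees its own subset (6, 13, and 2 tools here), and a coordinator cuts a plan into hand-offs.

```python
from itertools import groupby

# Hypothetical split of the 21 tools among three specialized agents.
AGENT_TOOLS = {
    "simulation": {
        "PowerFlowTool", "ShortCircuitTool", "ListElementsTool",
        "ElementControlTool", "ModifyTransformerTool", "GetLineParametersTool",
    },
    "protection": {
        "AddCurrentTransformerTool", "AddVoltageTransformerTool",
        "ListInstrumentTransformersTool", "AddOvercurrentRelayTool",
        "AddDirectionalOCRelayTool", "AddDistanceRelayTool",
        "ModifyDistanceRelayTool", "AddDifferentialRelayTool",
        "AddVoltageRelayTool", "AddFrequencyRelayTool",
        "GetRelaySettingsTool", "ModifyRelaySettingsTool",
        "AnalyzeDistanceReachTool",
    },
    "visualization": {"GenerateTccPlotTool", "GenerateRxPlotTool"},
}

TOOL_TO_AGENT = {tool: agent
                 for agent, tools in AGENT_TOOLS.items()
                 for tool in tools}


def agent_for(tool: str) -> str:
    """Look up which specialist owns a tool; fail loudly on unknown names."""
    try:
        return TOOL_TO_AGENT[tool]
    except KeyError:
        raise ValueError(f"no agent owns tool {tool!r}") from None


def split_plan(plan: list[str]) -> list[tuple[str, list[str]]]:
    """Group consecutive steps owned by the same agent into one hand-off."""
    return [(agent, list(steps)) for agent, steps in groupby(plan, key=agent_for)]


if __name__ == "__main__":
    plan = ["ShortCircuitTool", "GetRelaySettingsTool", "ModifyRelaySettingsTool",
            "ShortCircuitTool", "GenerateTccPlotTool"]
    for agent, steps in split_plan(plan):
        print(agent, steps)
```

The cost of this design shows up in the hand-offs: every switch between agents needs the relevant results passed along, which is where most of the added complexity would live.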

For now I continue with the 21 tools and observe where it fails. But it's something I'll have to solve if I want to scale to larger systems.

Final reflection

Am I going to make it? I think so.

I've always worked this way: the last days before the deadline are where I make the most progress. I'm already at a good pace and it should keep increasing. I plan to write more, document more — not just because it helps the project, but because it motivates me to keep moving forward.

I increasingly notice that one of the current goals of developers is to build workflows that can run for long periods of time and coordinate many agents working on behalf of a human. I think a key skill in 2025 will be learning to delegate tasks to agents. Programming increasingly feels less like programming and more like delegating.