Automated power flow analysis with artificial intelligence

Day 33 / 60

Today, after several days of work, the agent delivered a complete power flow study.

Study cover

I didn't tell it how to do it. I just gave it the tools and a ridiculously simple prompt:

Run a power flow study of the system with the case as is and another with the PMG generator on, give me the different diagrams for the different scenarios and in each case, with or without generator give me different contingencies, (a line goes down or you increase generation) and generate a PDF report.

Base case single-line diagram

The system I analyzed is small: 7 buses. A toy system, basically. But what the agent did surprised me.

The numbers

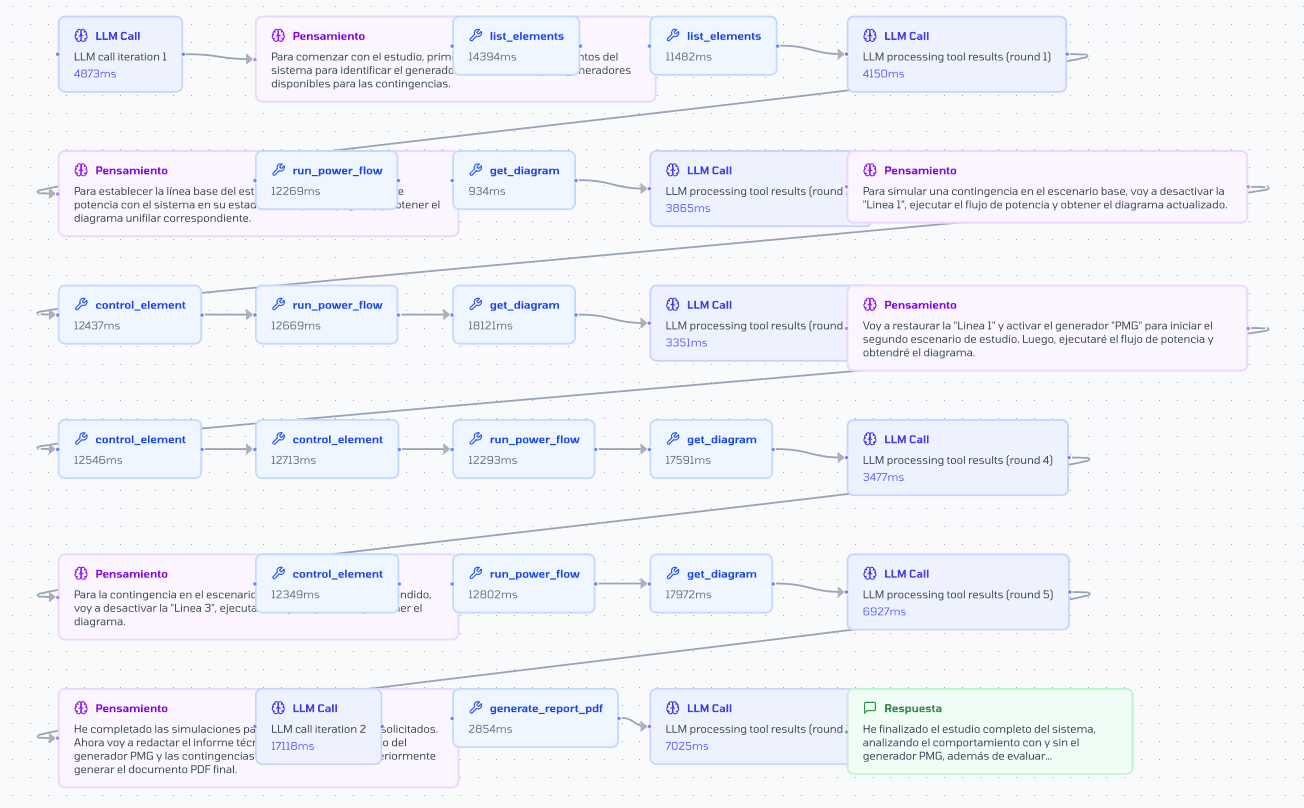

The agent took 10 minutes. It made 26 tool calls in sequence. It ran 4 different contingency analyses: it solved the system with the PMG generator on and off, and in each scenario removed a line to see how the system behaved.
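One way to read those four runs is as a 2x2 grid: generator state crossed with network state. The sketch below only enumerates that grid. The labels are hypothetical, and the post does not say that the agent built its scenarios this way.

```python
from itertools import product

# Hypothetical labels. The post only says the PMG generator was switched
# on and off, and that a line was removed in both scenarios.
GENERATOR_STATES = ("PMG off", "PMG on")
NETWORK_STATES = ("all lines in service", "one line out")


def build_scenarios():
    """Return every generator/network combination as a list of dicts."""
    return [
        {"generator": gen, "network": net}
        for gen, net in product(GENERATOR_STATES, NETWORK_STATES)
    ]


scenarios = build_scenarios()
for s in scenarios:
    print(f"{s['generator']:8s} | {s['network']}")
```

Writing the grid down explicitly makes it easy to see which combinations a run covered and which ones it skipped.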

Total cost: $0.149 USD.

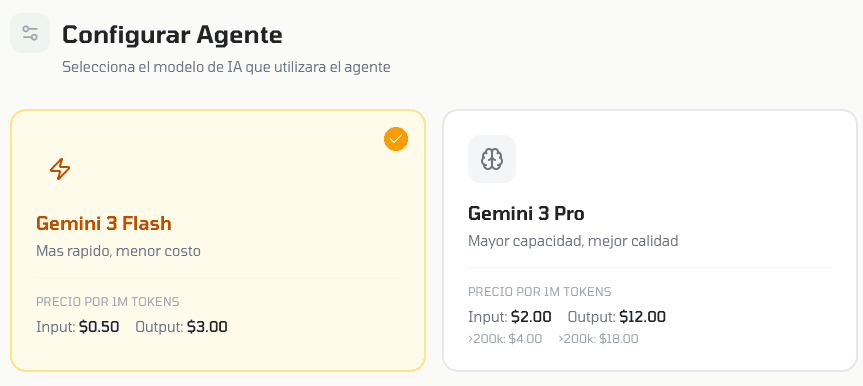

This is with Gemini 3 Flash, not the most capable model in the family. With Gemini 3 Pro I estimate the same run could easily cost more than $1 USD. I already added a view in D.N to change the model on the fly. I ran some tests with Pro, but it failed in strange ways. Once the agent is more polished, I'll test again.
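To put those figures in perspective, here is the arithmetic spelled out. It assumes the 26 tools reported above were 26 tool calls, and it uses no real pricing data: the $1 value is just the rough estimate from the paragraph above.

```python
run_cost_usd = 0.149      # total cost of the Flash run, from the post
tool_calls = 26           # assumption: one call per tool step reported
pro_estimate_usd = 1.00   # the post's rough lower bound for a Pro run

cost_per_call = run_cost_usd / tool_calls
implied_multiplier = pro_estimate_usd / run_cost_usd

print(f"{cost_per_call:.4f}")       # 0.0057 USD per tool call on average
print(f"{implied_multiplier:.1f}")  # 6.7x the Flash cost to reach $1
```

So the estimate amounts to saying that a Pro run would cost at least about seven times the Flash run.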

Agent settings view

What surprises me

I insist: I don't tell it how to do it. I just give it the tools.

I'm surprised by how well it works and how sensibly it makes decisions, considering how vague my prompt is. There's something there that reminds me of what I wrote in the 50% post: "the agent decides, executes, adjusts. And it does it alone."

Now, the report is weak. It reads as if a junior engineer wrote it, maybe someone even less experienced. I could write something much better. But that's not the point yet.

The point is that the agent did the technical work. It ran the simulations, analyzed contingencies, generated the data. The report is just the presentation layer—and that's the easy part to improve.

Agent flow for writing a power flow

How I'm generating reports (for now)

I have experience doing this type of study manually. Right now I only have 2 tools dedicated to the report:

- A tool that extracts the information and diagrams generated by all the other tools

- Another tool that takes that information and writes the PDF with tables and images
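The two-tool split above can be sketched as an extract stage followed by a render stage. Everything here is hypothetical: the function names, the data shapes, and the voltage values are placeholders, not output from the study. The render stage also produces plain text rather than a PDF, to keep the sketch free of third-party libraries.

```python
from dataclasses import dataclass, field


@dataclass
class ScenarioResult:
    name: str
    bus_voltages_pu: dict  # bus name -> voltage magnitude in per unit
    diagram_path: str


@dataclass
class ReportData:
    title: str
    scenarios: list = field(default_factory=list)


def extract_report_data(title, raw_outputs):
    """Stage 1: gather what the earlier tools produced into one structure."""
    data = ReportData(title=title)
    for out in raw_outputs:
        data.scenarios.append(
            ScenarioResult(out["name"], out["voltages"], out["diagram"])
        )
    return data


def render_report(data):
    """Stage 2: turn the structure into text with tables and image references.

    A real version would hand this content to a PDF library instead.
    """
    lines = [data.title, ""]
    for sc in data.scenarios:
        lines.append(sc.name)
        lines.append("Bus | V (pu)")
        for bus, v in sorted(sc.bus_voltages_pu.items()):
            lines.append(f"{bus} | {v:.3f}")
        lines.append(f"[diagram: {sc.diagram_path}]")
        lines.append("")
    return "\n".join(lines)


# Placeholder input, not real study results.
raw = [{"name": "Base case, PMG off",
        "voltages": {"Bus 1": 1.0, "Bus 2": 0.98},
        "diagram": "base_case.png"}]
text = render_report(extract_report_data("Power flow study", raw))
print(text)
```

Keeping the intermediate structure separate from the rendering is what would let a dedicated writing agent replace the second stage later without touching the first.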

It's not the best architecture, I know. It's what I managed to put together so far. In the coming days I'll separate this logic better—I probably need a specialized agent just for writing and formatting.

The 30 tools problem

I've reached 30 tools in the agent and that worries me.

In the previous post I mentioned that Gemini 3 behaves well with something between 15 and 20 tools. With 21 I was already on the edge. With 30, I'm clearly over.

This explains some weird behaviors I've seen: the agent sometimes chooses incorrect tools or invents arguments that don't exist. It's the same problem I documented before, but amplified.
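One cheap guard against both failure modes is to check every proposed call against a registry before executing it. This is a minimal sketch, assuming a hand-written registry; the tool names and argument names are made up for illustration and are not the agent's real tools.

```python
# Hypothetical registry: tool name -> required and optional argument names.
TOOL_SCHEMAS = {
    "run_power_flow": {"required": {"case"}, "optional": {"generator_on"}},
    "open_line": {"required": {"case", "line_id"}, "optional": set()},
}


def validate_tool_call(name, args, schemas=TOOL_SCHEMAS):
    """Return a list of problems; an empty list means the call looks valid."""
    if name not in schemas:
        return [f"unknown tool: {name}"]
    spec = schemas[name]
    given = set(args)
    allowed = spec["required"] | spec["optional"]
    problems = []
    # Arguments the tool needs but the model did not supply.
    for missing in sorted(spec["required"] - given):
        problems.append(f"missing argument: {missing}")
    # Arguments the model supplied but the tool does not define.
    for extra in sorted(given - allowed):
        problems.append(f"unexpected argument: {extra}")
    return problems


print(validate_tool_call("run_power_flow", {"case": "base"}))
print(validate_tool_call("run_short_circuit", {}))
print(validate_tool_call("open_line", {"case": "base", "branch": 3}))
```

Feeding the problem list back to the model as the tool result gives it a chance to correct itself instead of failing silently.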

The coming change: sub-agents

The solution is obvious, but I'm a bit scared to implement it: a multi-agent architecture.

Instead of one agent that knows everything, I'll have specialized agents:

- An agent for simulations (power flows, short-circuits)

- An agent for protections (relay configuration)

- An agent for visualization and reports

Each with a maximum of 15 tools. The main agent coordinates and delegates.
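The structure described above can be sketched as a coordinator that routes each tool to the sub-agent that owns it, with the 15-tool cap enforced at construction time. This is a simplification under stated assumptions: in a real system the coordinator would be a model deciding what to delegate, whereas here it is a plain lookup, and all class and tool names are hypothetical.

```python
MAX_TOOLS_PER_AGENT = 15  # cap taken from the post


class SubAgent:
    def __init__(self, name, tools):
        if len(tools) > MAX_TOOLS_PER_AGENT:
            raise ValueError(
                f"{name} has {len(tools)} tools, limit is {MAX_TOOLS_PER_AGENT}"
            )
        self.name = name
        self.tools = dict(tools)  # tool name -> callable

    def handle(self, tool_name, **kwargs):
        return self.tools[tool_name](**kwargs)


class Coordinator:
    def __init__(self, agents):
        # Map each tool name to the sub-agent that owns it.
        self.route = {}
        for agent in agents:
            for tool_name in agent.tools:
                self.route[tool_name] = agent

    def delegate(self, tool_name, **kwargs):
        agent = self.route.get(tool_name)
        if agent is None:
            raise KeyError(f"no sub-agent owns tool {tool_name!r}")
        return agent.name, agent.handle(tool_name, **kwargs)


simulations = SubAgent(
    "simulations",
    {"run_power_flow": lambda case: f"power flow solved for {case}"},
)
reports = SubAgent("reports", {"write_pdf": lambda title: f"{title}.pdf"})
coordinator = Coordinator([simulations, reports])
print(coordinator.delegate("run_power_flow", case="base"))
```

The point of the cap is that each model context only ever sees its own small tool list, while the coordinator sees only the names of the sub-agents.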

The additional advantage is that this would allow me to parallelize scenarios. The main agent could tell one sub-agent to analyze a specific contingency while another sub-agent analyzes a different one. Instead of 26 sequential tool calls in 10 minutes, the same study might take about half the time.
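A minimal sketch of that fan-out, assuming each contingency can be analyzed independently. The `analyze_contingency` function is a hypothetical stand-in for a sub-agent: it only sleeps to simulate work and does not run any simulation.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def analyze_contingency(name):
    """Stand-in for a sub-agent run; sleeps instead of solving anything."""
    time.sleep(0.1)
    return {"scenario": name, "status": "done"}


def run_parallel(names, max_workers=4):
    """Run all scenarios concurrently and return results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(analyze_contingency, names))


names = ["PMG off, line out", "PMG on, line out"]
results = run_parallel(names)
for r in results:
    print(r["scenario"], r["status"])
```

Threads fit here because a sub-agent spends most of its time waiting on model and tool responses. The actual speedup depends on how many steps truly are independent, so the halving mentioned above is a guess rather than a guarantee.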

I'm worried about this change because until now the simple ReAct pattern was working perfectly for me. One agent, one loop, one decision at a time. I was totally convinced.

But the numbers don't lie: 30 tools is too many. And if I want to scale to real CEN systems with more than 2,500 buses, I need an architecture that supports that complexity.

Reflection

Every day that passes I see more clearly that this is going to work. Not because the agent is perfect—it's not. The report it generates is mediocre, hallucinations still appear, and the switch to multi-agent is going to cost me time.

But there's something fundamental that's already working: the agent is making real technical decisions, running real simulations, analyzing real contingencies. The engineer's judgment is starting to emerge from the tools I gave it.

That's what matters. The rest is polishing.